Test automation for start-ups

Quality assurance is an activity often overlooked by start-ups. Adding features attracts new customers and revenue, but testing doesn't seem to bring much immediate value, right?

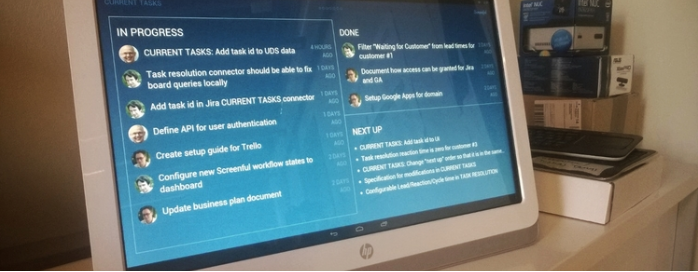

That was our initial approach to quality assurance at Screenful too. Naturally we have unit tests. We eat our own dog food. We have big screens in our office to show the progress of our projects and the performance of this web site. Our pilot customers give feedback and we use it to make our stuff better.

This approach worked, kind of. Visual bugs and annoyances were quickly spotted and fixed, and unimportant statistics were removed from the screens.

CURRENT TASKS on the Screenful office radiator.

That is, until we realised that a whole category of issues was going unnoticed. The data we were showing wasn't always accurate: lead times were off, tasks were missing and performance figures were inconsistent. But as long as the numbers looked mostly plausible, we and our users were happy. We actually had plenty of unit tests for the individual components, but we didn't have any functional tests that would exercise the system end-to-end. So we had to stop and do a lot of time-consuming manual testing to get our product in shape. Which we did.

At that point the idea of automated functional testing started to look very appealing.

By a few lucky coincidences we met Kari Kakkonen and Nikolaj Cankar from Knowit, a testing professional services company. Kari and Niko wanted to offer an affordable and lightweight test automation solution specifically tailored for start-ups. They just needed somebody to test-drive their product.

It was a perfect match.

Together with Niko we created a test suite using Robot Framework, a popular open source test automation framework. Writing tests with Robot is efficient because you can abstract away the technical details and focus on the concepts of the system under test. Even non-programmers can understand and write test cases.

Still, it was a really big time-saver to have someone who understands Robot do the initial setup and write the first tests.

The process started with test design. In our case that meant taking a look at the functionality and architecture of the system and deciding what we wanted to test. That was not too difficult because we had a clear problem to solve. We implemented the tests on two levels: we wrote the low-level integrations to our proprietary data sources in Python and the high-level test cases using the Robot IDE. Although there are a ton of test libraries that you can use with Robot, some 'real programming' is usually needed. Finally we integrated the test suite with our development environment, which meant hosting it on Heroku.
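To give a rough idea of what the Python side of such a two-level setup can look like, here is a minimal sketch. It is an illustration only, not the Screenful code: the class name, the keyword names, the `fetch_tasks` callable and the `done_at` field are all hypothetical stand-ins for a proprietary data source. It relies on the Robot Framework convention that the public methods of a Python class used as a test library become keywords, and that a keyword fails when it raises an exception.

```python
class DashboardDataLibrary:
    """Hypothetical Robot Framework keyword library (all names are illustrative)."""

    # One shared instance for the whole test run.
    ROBOT_LIBRARY_SCOPE = "GLOBAL"

    def __init__(self, fetch_tasks=None):
        # fetch_tasks is an assumed callable that returns a list of task dicts.
        # In a real setup it would wrap the API of the data source.
        self._fetch_tasks = fetch_tasks if fetch_tasks is not None else (lambda: [])

    def count_open_tasks(self):
        """Return the number of tasks that have no completion timestamp."""
        return sum(1 for task in self._fetch_tasks() if task["done_at"] is None)

    def open_task_count_should_be(self, shown_on_dashboard):
        """Fail if the number shown on the dashboard differs from the source data."""
        expected = self.count_open_tasks()
        # Arguments written in Robot test data typically arrive as strings.
        shown = int(shown_on_dashboard)
        if shown != expected:
            raise AssertionError(
                f"Dashboard shows {shown} open tasks, data source has {expected}"
            )
```

In a high-level test case these methods would be available as the keywords `Count Open Tasks` and `Open Task Count Should Be`, so the person writing the test case does not need to know how the data is fetched.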

There were some surprises, too. Initially we thought it would make sense to create artificial, static data sets and run tests against them. It turned out that in our case creating them would be a tedious and time-consuming task, so we decided to test against live data instead. This means that we have to re-implement some of the processing logic as a part of the test suite, but this actually takes less time than building a dummy data set. As a bonus, the bugs we find this way are always relevant: they appear on real data sets and therefore would be seen by our customers as well.
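The idea of re-implementing processing logic inside the test suite can be sketched as follows. This is a hedged example under stated assumptions, not the actual test code: it assumes that lead time means the time from a task's creation to its completion, that tasks are dicts with hypothetical `created_at` and `done_at` fields, and that a small tolerance is acceptable when comparing against the figure on the dashboard.

```python
from datetime import datetime
from statistics import mean


def expected_avg_lead_time_days(tasks):
    """Independently recompute the average lead time (in days) from raw tasks.

    Assumption: lead time = done_at - created_at, open tasks are ignored.
    """
    durations = [
        (task["done_at"] - task["created_at"]).total_seconds() / 86400
        for task in tasks
        if task["done_at"] is not None
    ]
    return mean(durations) if durations else 0.0


def check_lead_time(tasks, shown_days, tolerance_days=0.1):
    """Raise AssertionError if the dashboard value is off by more than the tolerance."""
    expected = expected_avg_lead_time_days(tasks)
    if abs(expected - shown_days) > tolerance_days:
        raise AssertionError(
            f"Dashboard shows {shown_days:.2f} days, recomputed {expected:.2f} days"
        )
    return expected
```

Because the expected value is recomputed from whatever the live source returns at test time, no fixed data set has to be maintained. The trade-off is that the test logic is a second implementation that can contain bugs of its own.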

Now that the system is in place we can easily add a few test cases in each sprint and continuously improve the quality of our dashboards.

If you want to know more about Screenful, visit the web site or sign up for a trial. I'm also writing a follow-up post about how to run Robot Framework tests on Heroku. If you need a similar setup, subscribe to our blog to get notified when it's published.

This article was written by Tuomas Tammi

Tuomas is the CTO and co-founder of Screenful, the company that is reinventing how businesses measure their performance. Screenful develops dashboards that are both actionable and beautiful to look at. You can follow Screenful on Twitter (@screenful).